“Musification” of brain signals, from Stanford

A nice write-up of some fascinating recent research. Of course, we’re immediately curious about how the resulting music would look in rhythmic terms once converted to WaveDNA Music Molecules. At first glance, it seems plausible that brain seizure activity has an identifiable ‘genome’ of BarForms and BeatForms. The tricky part would be converting the apparent tempo changes to MIDI accurately, but that seems possible in principle. In any case, the findings are interesting and exciting from many perspectives.

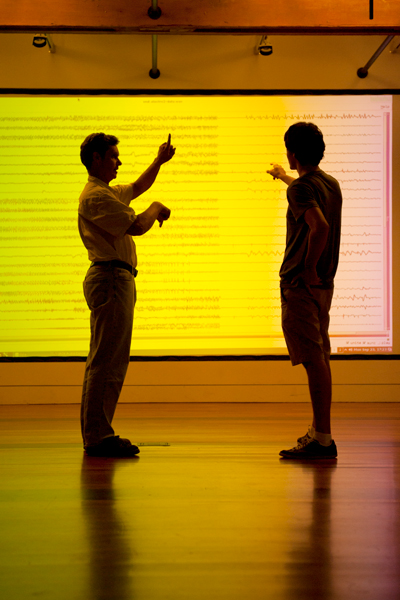

Josef Parvizi was enjoying a performance by the Kronos Quartet when the idea struck. The musical troupe was midway through a piece in which the melodies were based on radio signals from outer space, and Parvizi, a neurologist at Stanford Medical Center, began wondering what the brain’s electrical activity might sound like set to music.

He didn’t have to look far for help. Chris Chafe, a professor of music research at Stanford, is one of the world’s foremost experts in “musification,” the process of converting natural signals into music. One of his previous works involved measuring the changing carbon dioxide levels near ripening tomatoes and converting those changing levels into electronic performances.

Parvizi, an associate professor, specializes in treating patients suffering from intractable seizures. To locate the source of a seizure, he places electrodes in patients’ brains to create electroencephalogram (EEG) recordings of both normal brain activity and a seizure state.

He shared a consenting patient’s EEG data with Chafe, who began setting the electrical spikes of the rapidly firing neurons to music. Chafe used a tone close to a human’s voice, in hopes of giving the listener an empathetic and intuitive understanding of the neural activity.

Upon a first listen, the duo realized they had done more than create an interesting piece of music. [Listen to the audio here]

“My initial interest was an artistic one at heart, but, surprisingly, we could instantly differentiate seizure activity from non-seizure states with just our ears,” Chafe said. “It was like turning a radio dial from a static-filled station to a clear one.”

If they could achieve the same result with real-time brain activity data, they might be able to develop a tool to allow caregivers for people with epilepsy to quickly listen to the patient’s brain waves to hear whether an undetected seizure might be occurring.

Parvizi and Chafe dubbed the device a “brain stethoscope.”

The sound of a seizure

The EEGs Parvizi conducts register brain activity from more than 100 electrodes placed inside the brain; Chafe selects certain electrode/neuron pairings and allows them to modulate notes sung by a female singer. As the electrode captures increased activity, it changes the pitch and inflection of the singer’s voice.
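To make the idea of activity modulating pitch concrete, here is a minimal sketch in Python. It is not Chafe's method, which the article does not describe in detail. It simply assumes that one electrode's signal is a list of numbers, averages the absolute amplitude over short windows, and scales each window's level onto a range of MIDI note numbers. The function names, the window size, and the note range are all hypothetical choices made for illustration.

```python
def activity_to_midi_notes(samples, window=4, low_note=48, high_note=84):
    """Map windowed activity levels to MIDI note numbers (illustrative only).

    samples: amplitude values from one electrode (assumed input format).
    window, low_note, high_note: hypothetical parameters, not from the article.
    """
    if window <= 0:
        raise ValueError("window must be positive")

    # Mean absolute amplitude for each non-overlapping window.
    levels = []
    for start in range(0, len(samples), window):
        chunk = samples[start:start + window]
        levels.append(sum(abs(x) for x in chunk) / len(chunk))

    if not levels:
        return []

    lowest, highest = min(levels), max(levels)
    span = highest - lowest

    notes = []
    for level in levels:
        # Scale each level to 0..1; a flat signal maps to the lowest note.
        fraction = (level - lowest) / span if span > 0 else 0.0
        notes.append(low_note + round(fraction * (high_note - low_note)))
    return notes


def midi_to_hz(note):
    """Convert a MIDI note number to frequency in Hz (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


if __name__ == "__main__":
    quiet_then_active = [0.1, -0.1, 0.1, -0.1, 1.0, -1.0, 1.0, -1.0]
    for note in activity_to_midi_notes(quiet_then_active):
        print(note, round(midi_to_hz(note), 1))
```

In this sketch, a quiet stretch followed by a burst of activity comes out as a low note followed by a high one. A real musification would also need to handle timing, loudness, and many electrodes at once.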

Before the seizure begins – during the so-called pre-ictal stage – the peeps and pops from each “singer” almost synchronize and fall into a clear rhythm, as if they’re following a conductor, Chafe said.

In the moments leading up to the seizure event, though, each of the singers begins to improvise. The notes become progressively louder and more scattered as the full seizure event (the ictal state) occurs. The way Chafe has orchestrated his singers, one can hear the electrical storm originate on one side of the brain and eventually cross over into the other hemisphere, creating a sort of sing-off between the two sides of the brain.

After about 30 seconds of full-on chaos, the singers begin to calm, trailing off into their post-ictal rhythm. Occasionally, one or two will pipe up erratically, but on the whole, the choir sounds extremely fatigued.

It’s the perfect representation of the three phases of a seizure event, Parvizi said.

Read the full article from Stanford News written by Bjorn Carey here.
